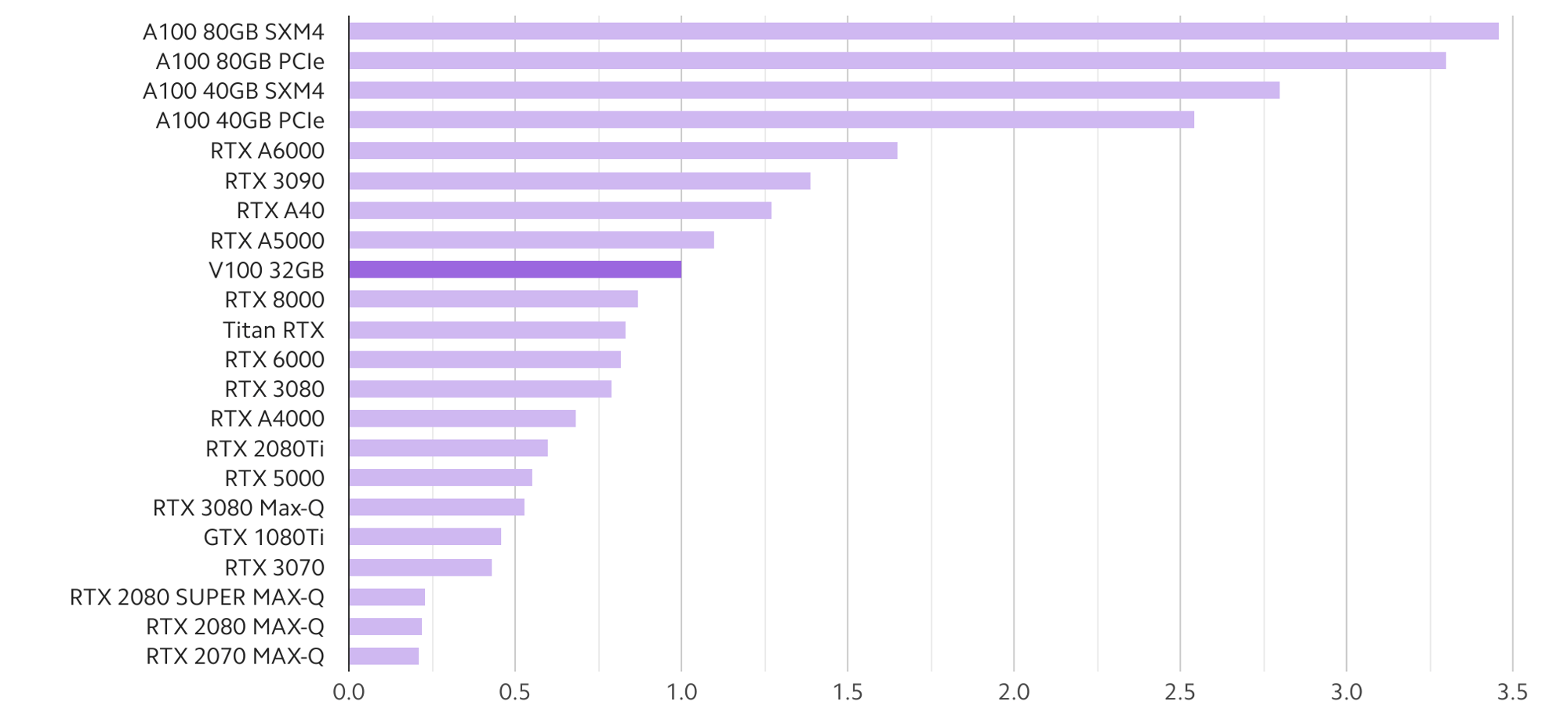

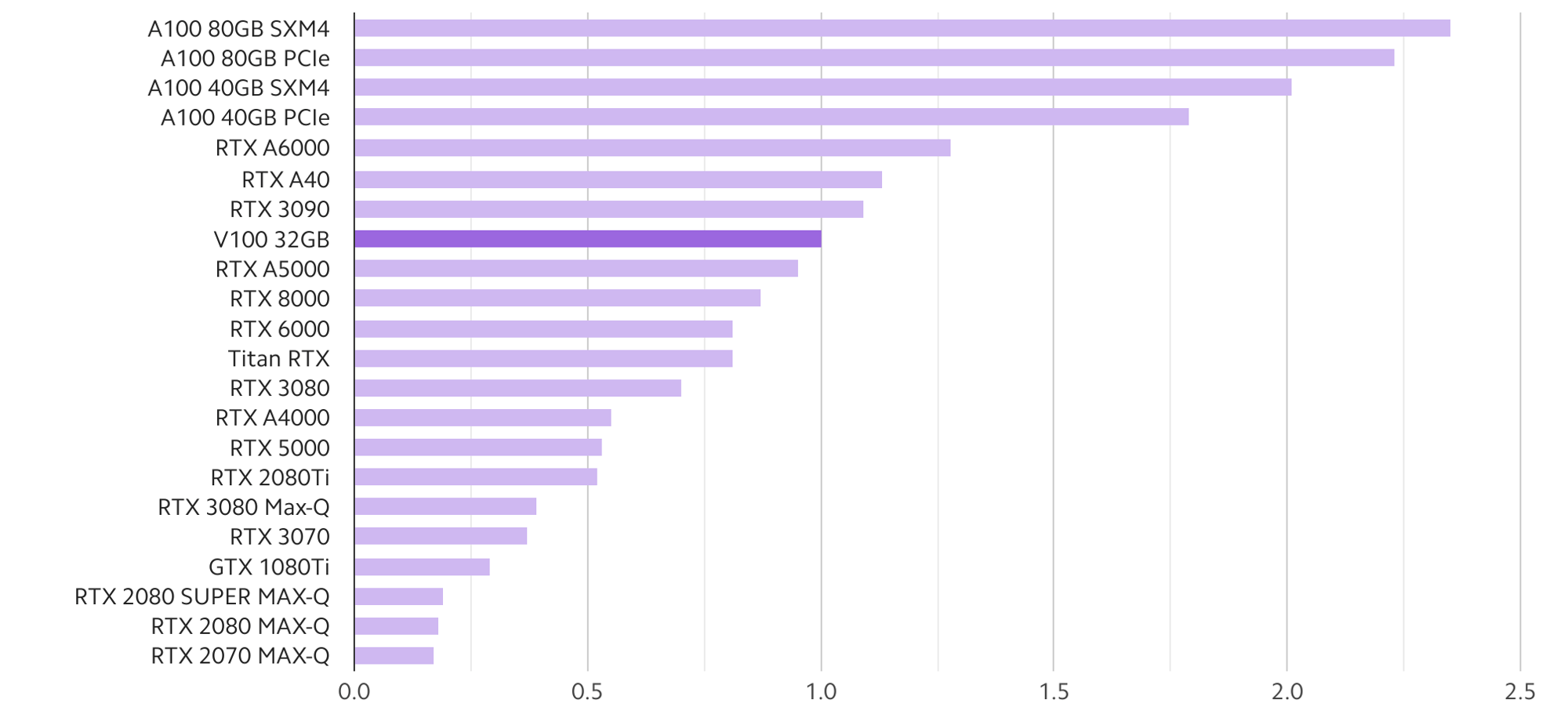

Best GPU for AI/ML, deep learning, data science in 2023: RTX 4090 vs. 3090 vs. RTX 3080 Ti vs A6000 vs A5000 vs A100 benchmarks (FP32, FP16) – Updated – | BIZON

Sharing GPU for Machine Learning/Deep Learning on VMware vSphere with NVIDIA GRID: Why is it needed? And How to share GPU? - VROOM! Performance Blog